Selenium: How to Send an Email When a Test Case Fails in unittest

I am modifying the Selenium test code for our Banner application so that it sends an email whenever a test case fails.

I found some code in the Stack Overflow answer below and made a few changes to it.

https://stackoverflow.com/questions/4414234/getting-pythons-unittest-results-in-a-teardown-method/4415062

First, add a new class that sends a message when a test case fails.

    import base64
    import smtplib
    from email.header import Header
    from email.mime.image import MIMEImage
    from email.mime.multipart import MIMEMultipart

    from tools.config import config


    class sendmail:

        def __init__(self):
            self.cf = config().getcf()

        # Python has no method overloading (a second "def send" would simply
        # replace the first one), so the screenshot is an optional argument.
        def send(self, msg, pic=None):
            try:
                sender = self.cf.get('NOTICE', 'sender')
                receivers = [self.cf.get('NOTICE', 'receivers')]
                message = MIMEMultipart()
                message['From'] = Header('Selenium Test', 'utf-8')
                message['To'] = Header('dba', 'utf-8')
                message['Subject'] = Header(msg, 'utf-8')
                if pic is not None:
                    # pic is the base64 string from get_screenshot_as_base64()
                    img = MIMEImage(base64.b64decode(pic), _subtype='png')
                    img.add_header('Content-Disposition', 'attachment', filename='img.png')
                    message.attach(img)
                smtpObj = smtplib.SMTP('smtp.odu.edu')
                smtpObj.sendmail(sender, receivers, message.as_string())
                smtpObj.quit()
            except smtplib.SMTPException:
                print('failed')
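The message-building part can be checked on its own, without contacting an SMTP server. The following is only a minimal sketch under my own assumptions: `build_message` is a hypothetical helper (not part of the class above), it attaches the screenshot with `MIMEImage`, and the "screenshot" is a few fake bytes rather than a real PNG.

```python
import base64
from email.header import Header
from email.mime.image import MIMEImage
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_message(subject, png_b64=None):
    """Build a multipart mail; png_b64 is a base64-encoded PNG or None."""
    message = MIMEMultipart()
    message['Subject'] = Header(subject, 'utf-8')
    # Repeat the subject as a plain-text body so the mail is not empty.
    message.attach(MIMEText(subject, 'plain', 'utf-8'))
    if png_b64 is not None:
        img = MIMEImage(base64.b64decode(png_b64), _subtype='png')
        img.add_header('Content-Disposition', 'attachment', filename='img.png')
        message.attach(img)
    return message


# Hypothetical stand-in for driver.get_screenshot_as_base64():
# the PNG signature followed by padding bytes, not a valid image.
fake_png = base64.b64encode(b'\x89PNG\r\n\x1a\n' + b'\x00' * 8).decode('ascii')

msg = build_message('FAIL at test_login', fake_png)
parts = msg.get_payload()
print([p.get_content_type() for p in parts])
print(parts[1].get_filename())
```

Passing `_subtype='png'` explicitly avoids relying on image type detection, which would not recognize the fake bytes reliably.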

Then override the tearDown function in the class that inherits from unittest.TestCase.

    def list2reason(self, exc_list):
        # Return the traceback text if the last recorded entry belongs to this test
        if exc_list and exc_list[-1][0] is self:
            return exc_list[-1][1]

    def tearDown(self):
        if hasattr(self, '_outcome'):  # Python 3.4 - 3.10 (these private helpers were removed in 3.11)
            result = self.defaultTestResult()  # these 2 methods have no side effects
            self._feedErrorsToResult(result, self._outcome.errors)
        else:  # Python 3.2 - 3.3 or 2.7
            result = getattr(self, '_outcomeForDoCleanups', self._resultForDoCleanups)
        error = self.list2reason(result.errors)
        failure = self.list2reason(result.failures)
        if error or failure:
            typ, text = ('ERROR', error) if error else ('FAIL', failure)
            # First unindented line after the traceback header, e.g. "AssertionError: ..."
            msg = [x for x in text.split('\n')[1:] if not x.startswith(' ')][0]
            ss = self.driver.get_screenshot_as_base64()
            sendmail().send("%s at %s: [%s]" % (typ, self.id(), msg), ss)
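The list comprehension that builds `msg` is the least obvious line in tearDown: it skips the "Traceback" header and every indented line (file locations and source code), which leaves the exception line itself. A small sketch to illustrate it outside of a test run; `first_message_line` is a name I made up for this example.

```python
import traceback


def first_message_line(text):
    """Return the first unindented line after the traceback header."""
    return [x for x in text.split('\n')[1:] if not x.startswith(' ')][0]


try:
    assert 1 + 1 == 3, 'banner title mismatch'
except AssertionError:
    # Same kind of text that unittest stores in result.errors / result.failures
    text = traceback.format_exc()

print(first_message_line(text))
```

This line is what ends up between the square brackets in the mail subject.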

“opatch: Permission denied” Reported During July 2019 PSU Patching

Update July 26

I got feedback from Oracle: setting _JAVA_OPTIONS changes the location of the tmp folder that OPatch uses.

export _JAVA_OPTIONS="-Djava.io.tmpdir=<local path>"

=============================================================================

When I applied the Oracle Database July 2019 PSU on machines where /tmp is mounted with the noexec option, the error “opatch: Permission denied” was reported.

    $ORACLE_HOME/OPatch/opatch apply 29494060/
    Oracle Interim Patch Installer version 12.2.0.1.17
    Copyright (c) 2019, Oracle Corporation. All rights reserved.
    Oracle Home       : /l01/app/oracle/product/12.1.0.2
    Central Inventory : /l01/app/oraInventory
       from           : /l01/app/oracle/product/12.1.0.2/oraInst.loc
    OPatch version    : 12.2.0.1.17
    OUI version       : 12.1.0.2.0
    Log file location : /l01/app/oracle/product/12.1.0.2/cfgtoollogs/opatch/opatch2019-07-19_11-21-09AM_1.log
    Verifying environment and performing prerequisite checks...
    --------------------------------------------------------------------------------
    Start OOP by Prereq process.
    Launch OOP...
    /l01/app/oracle/product/12.1.0.2/OPatch/opatch: line 1395: /tmp/oracle-home-1563549677485107/OPatch/opatch: Permission denied
    /l01/app/oracle/product/12.1.0.2/OPatch/opatch: line 1395: exec: /tmp/oracle-home-1563549677485107/OPatch/opatch: cannot execute: Permission denied

We did not have this problem with the April 2019 PSU patching.

Setting the variables TMP / TEMP / TMPDIR / TEMPDIR does not help.

The simplest way to solve the problem is to remount /tmp without the “noexec” option.
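Before patching, it is easy to check whether a host is affected at all. The sketch below parses text in the /proc/mounts format and reports whether a mount point carries the noexec option. The function names and the sample mount table are made up for illustration; on a real Linux host you would read /proc/mounts instead.

```python
def mount_options(mounts_text, mount_point):
    """Return the option set of mount_point from /proc/mounts-style text."""
    options = None
    for line in mounts_text.splitlines():
        fields = line.split()
        # Fields: device, mount point, filesystem type, options, dump, pass
        if len(fields) >= 4 and fields[1] == mount_point:
            options = set(fields[3].split(','))  # the last matching entry wins
    return options


def is_noexec(mounts_text, mount_point='/tmp'):
    options = mount_options(mounts_text, mount_point)
    return options is not None and 'noexec' in options


# Hypothetical sample, not taken from a real machine.
sample = (
    "/dev/mapper/vg-root / xfs rw,relatime 0 0\n"
    "/dev/mapper/vg-tmp /tmp xfs rw,nosuid,nodev,noexec,relatime 0 0\n"
)
print(is_noexec(sample))

# On a real Linux host:
# with open('/proc/mounts') as f:
#     print(is_noexec(f.read()))
```

If this reports noexec for /tmp, the July 2019 OPatch run is likely to hit the error shown above unless /tmp is remounted or OPatch is pointed at another temporary directory.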

Oracle mentions the following in Oracle Database 19c Release Update & Release Update Revision July 2019 Known Issues (Doc ID 2534815.1):

1 Known Issues

1.1 OOP Ignorable messages when running Opatch

The message "Start OOP by Prereq process" and the message "Launch OOP..." prints on console during 19.4.0.0.190716 DB RU rollback using the specified Opatch 12.2.0.1.17 release. Both of these informational messages have no functional impact to the rollback flow and are printed in error. Please ignore them. The issue will be corrected with the next version of Opatch 12.2.0.1.18 release.

So this looks like an OPatch bug.

I checked the opatch script and debugged it in my test environment.

For the April 2019 PSU, the opatch script invokes the Java program to patch directly. For the July 2019 PSU, it invokes the same Java program to generate a shell file that performs out-of-place (OOP) patching: OPatch copies itself to the /tmp/xxxxx/OPatch folder and runs from there.

One way to prevent OPatch from going into this self-patching OOP mode is to use the “-restart” option.

$ORACLE_HOME/OPatch/opatch apply 29774383 -silent -restart

This option is undocumented. I am not sure whether it is safe to use, and I am waiting for Oracle to confirm.

SSL Requests from the Banner Application Cannot Reach the Degree Application

The Banner application has a module that gets data from the DegreeWork application over SSL.

In the past, this did not work because the host degree.xyz.edu accepted only TLSv1.2 requests, while Banner was using only TLSv1.0. We configured the firewall to allow TLSv1.0 requests, and the problem was solved.

Last week, the problem happened again.

I tested with curl, and we could get the page without any error.

Then I used openssl to test the connection. TLSv1.0 is allowed without any restriction:

    # echo QUIT | openssl s_client -connect degree.odu.edu:8442 -tls1
    CONNECTED(00000003)
    depth=0 C = US, postalCode = 23529, ST = VA, L = NNN, street = xxx, O = xxx University, OU = ITS, CN = degree.xyz.edu
    verify error:num=20:unable to get local issuer certificate
    verify return:1
    depth=0 C = US, postalCode = 23529, ST = VA, L = NNN, street = xxx, O = xxx University, OU = ITS, CN = degree.xyz.edu
    verify error:num=21:unable to verify the first certificate
    verify return:1
    ---
    Certificate chain
     0 s:/C=US/postalCode=23529/ST=VA/L=NNN/street=xxx/O=xxx University/OU=ITS/CN=degree.xyz.edu
       i:/C=US/ST=MI/L=Ann Arbor/O=Internet2/OU=InCommon/CN=InCommon RSA Server CA
    ---
    ……
    ……

However, the output contains certificate verification errors:

verify error:num=20:unable to get local issuer certificate

verify error:num=21:unable to verify the first certificate

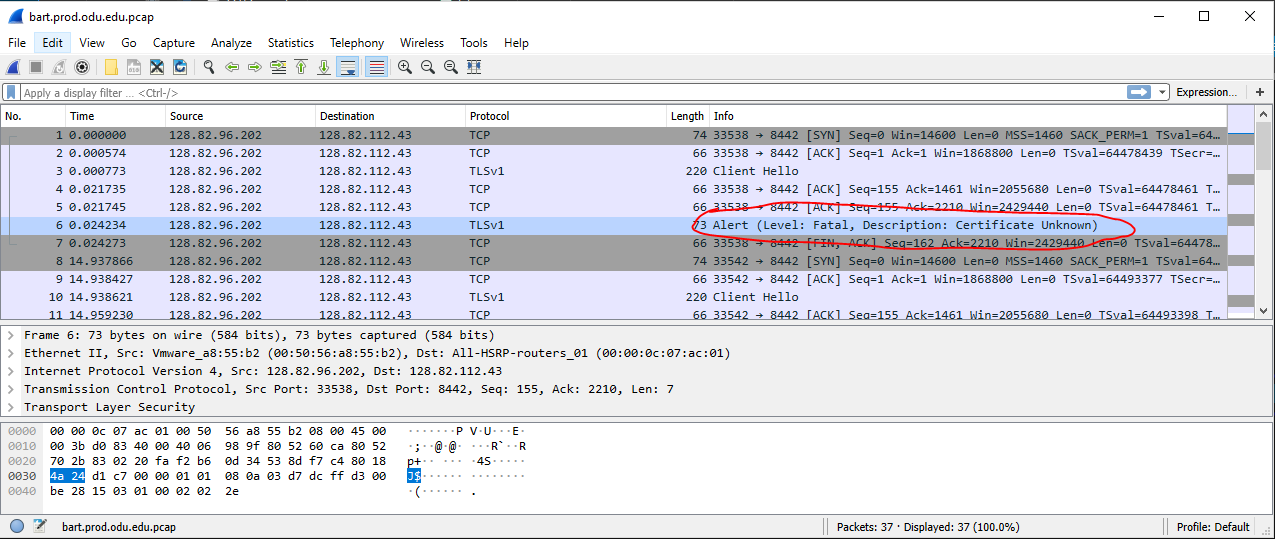

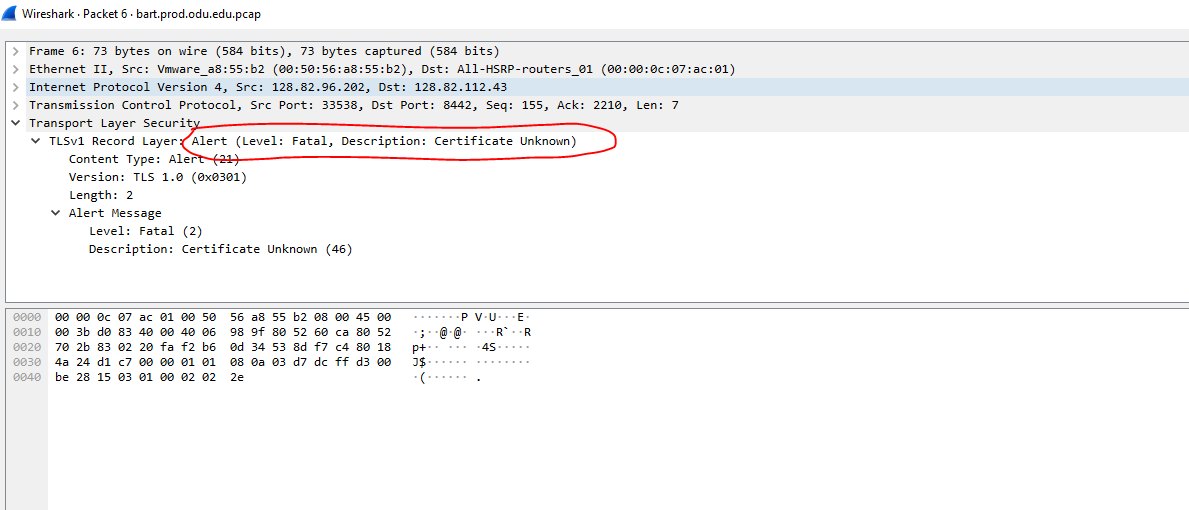

Then I used tcpdump to capture the traffic:

# nohup tcpdump -i eth0 -w /tmp/`hostname`.pcap -vnn '(src host 128.82.96.201 and dst host degree.xyz.edu)' or '(src host 128.82.96.201 and dst host 128.82.96.18)' or '(src host 128.82.96.201 and dst host 128.82.96.19)' &

We can see an error in the handshake phase, which also points to a problem with the certificate.

I submitted a ticket to the network team. They found that the issue was on the firewall: during the SSL decryption process, the firewall was not presenting the full certificate chain. They had uploaded the root and intermediate certificates as separate files, which did not work. After they combined all the certificates into one file, the problem was solved.
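Combining the certificates into one file is just a concatenation of the PEM blocks, and counting the blocks is a quick way to confirm that a bundle really holds the whole chain. A minimal sketch follows; the function names are mine, the certificate bodies are placeholders rather than real certificates, and the order (server, then intermediate, then root) is the usual convention, not something I confirmed with the network team.

```python
import re

# Matches one PEM-encoded certificate, including its BEGIN/END markers.
PEM_CERT = re.compile(
    r'-----BEGIN CERTIFICATE-----.*?-----END CERTIFICATE-----', re.DOTALL)


def split_certs(pem_text):
    """Return every certificate block found in pem_text, in order."""
    return PEM_CERT.findall(pem_text)


def combine_chain(*pem_texts):
    """Concatenate all certificates from the given PEM texts into one bundle."""
    certs = []
    for text in pem_texts:
        certs.extend(split_certs(text))
    return '\n'.join(certs) + '\n'


def fake_cert(body):
    # Placeholder only: the body is not valid certificate data.
    return '-----BEGIN CERTIFICATE-----\n%s\n-----END CERTIFICATE-----\n' % body


server = fake_cert('SERVER')
intermediate = fake_cert('INTERMEDIATE')
root = fake_cert('ROOT')

bundle = combine_chain(server, intermediate, root)
print(len(split_certs(bundle)))
```

A bundle that contains only one block while the server certificate was issued by an intermediate CA is a hint that clients will see "unable to get local issuer certificate".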

Installing RabbitMQ Server on RHEL7

Before installing RabbitMQ, we need to install Erlang, the language RabbitMQ is written in.

Pay attention to the compatibility between the Erlang and RabbitMQ versions.

https://www.rabbitmq.com/which-erlang.html#compatibility-matrix

If you encounter an error like this:

{"init terminating in do_boot",{undef,[{rabbit_prelaunch,start,[],[]},{init,start_em,1,[]},{init,do_boot,3,[]}]}}

Crash dump is being written to: /var/log/rabbitmq/erl_crash.dump...done

init terminating in do_boot ()

you should check whether your Erlang and RabbitMQ versions are compatible.

The Erlang RPM can be downloaded here; pick the version you need.

https://dl.bintray.com/rabbitmq-erlang/rpm/erlang/

The error information in “journalctl” can be misleading. Start RabbitMQ directly with “/usr/sbin/rabbitmq-server” to see the internal errors.

K8S - Create a New User Account and Log In Remotely

I want to create a user zhangqiaoc and make it the administrator of the namespace ns-zhangqiaoc.

On the master node

1. Create a key for zhangqiaoc and sign it with the cluster CA.

    # openssl genrsa -out zhangqiaoc.key 2048
    # openssl req -new -key zhangqiaoc.key -out zhangqiaoc.csr -subj "/CN=zhangqiaoc/O=management"
    # openssl x509 -req -in zhangqiaoc.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -out zhangqiaoc.crt -days 3650

2. Create a namespace.

    # kubectl create namespace ns-zhangqiaoc

3. Bind the ClusterRole admin to the user zhangqiaoc.

    # kubectl create -f rolebinding.yml

Content of rolebinding.yml:

    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: admin-binding
      namespace: ns-zhangqiaoc   # <-- in namespace ns-zhangqiaoc
    subjects:
    - kind: User
      name: zhangqiaoc
      apiGroup: ""
    roleRef:
      kind: ClusterRole
      name: admin                # <-- as the administrator
      apiGroup: ""

4. Copy the certificate and key of the user zhangqiaoc, together with the CA certificate, to the remote machine.

    scp /root/zhangqiaoc.crt 83.16.16.73:/root
    scp /root/zhangqiaoc.key 83.16.16.73:/root
    scp /etc/kubernetes/pki/ca.crt 83.16.16.73:/root

On the kubectl client node

5. Set up kubectl on the remote machine / client.

    # kubectl config set-cluster kubernetes --server=https://83.16.16.71:6443 --certificate-authority=/root/ca.crt
    # kubectl config set-credentials zhangqiaoc --client-certificate=/root/zhangqiaoc.crt --client-key=/root/zhangqiaoc.key
    # kubectl config set-context zhangqiaoc@kubernetes --cluster=kubernetes --namespace=ns-zhangqiaoc --user=zhangqiaoc
    # kubectl config use-context zhangqiaoc@kubernetes

6. Test

[root@k8s3 ~]# kubectl get pods

No resources found.

[root@k8s3 ~]# kubectl run mysql-client --image=mysql:latest --port=3306 --env="MYSQL_ALLOW_EMPTY_PASSWORD=1"

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/mysql-client created

    # kubectl config view
    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority: /root/ca.crt
        server: https://83.16.16.71:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        namespace: ns-zhangqiaoc
        user: zhangqiaoc
      name: zhangqiaoc@kubernetes
    current-context: zhangqiaoc@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: zhangqiaoc
      user:
        client-certificate: /root/zhangqiaoc.crt
        client-key: /root/zhangqiaoc.key

We can also replace the certificate file paths with the base64-encoded certificate strings:

[root@k8s3 ~]# cat zhangqiaoc.crt |base64 --wrap=0

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN1ekNDQWFNQ0NRRFFKVlNyT0lMWkp6QU5CZ2txaGtpRzl3MEJBUXNGQURBVk1STXdFUVlEVlFRREV3cHIKZFdKbGNtNWxkR1Z6TUI0WERURTVNRGN3TkRFMU5URXdNRm9YRFRJNU1EY3dNVEUxTlRFd01Gb3dLakVUTUJFRwpBMVVFQXd3S2VtaGhibWR4YVdGdll6RVRNQkVHQTFVRUNnd0tiV0Z1WVdkbGJXVnVkRENDQVNJd0RRWUpLb1pJCmh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTU83WWQrNlhvZVpla2F4VXRHUkhTdXpieG1pUTYzY3dWY0EKdC9IeUJ1U0dvTFBHR0thUCtsQ3dBaWxCeXZySUFCQzdrR0IzODFTS1FaVkIxZVpyZFFtTWRkSko1Zit5OGkrUQppR3dReitvaHFiZnB4ZnlaZVJiTTdVR2V1OTN5Vm1YcC9XUkYzbnJiNjRlZ1FKWW1xNU9hSGxKUkxRM1ZZeE5ECnFTalNFWFl6YXlaR3BucXpmeTJmNVM3dDJpUmpUdzB2ZkNtd3I5RXhaSlBkWFZtQjZudTJ6RStHQmlBRkZpd1EKc2hKV2V6aGc2SlZVWTJNT3IzdFJ2V1Rpa3hRb3FhWitING9iVzdpeXVSbDFFblJMMk9jNGlGTnRYSzRNeEZvZgozYitacXA5dnNxWm42SGpqelYxQkhVN0lHUEZvVmVtYXo2VU9sLy83Zm9WSDJMRE45cU1DQXdFQUFUQU5CZ2txCmhraUc5dzBCQVFzRkFBT0NBUUVBQ1hnODFsa3lVS3pVOTl5bUJ6RHZNUFR4ZFZXaDRuTzZhclZoN0lpU0RUdHAKVWdMKzRkRkR2Z29CQnlhTWVCN2lVQUNVc1cyakVvWHduN2JJcCtTeGVVWTFsbkJGbDBNOGFEVnp3MDg2ZzllWApQV3pydjV1eEhLSy8xV0FiT1ZISkRGMDJibU1oU08wVTZSQ2RoL2V3SzluQjVsNkE5OUxIUkxPc0JuUElId1JuCkJ1YTM1cDJRbnRNQUxaZUhicExSd3FGWUU1VG8yT25CMndTamlSbit6YkM2ZVdhY1FGSk5WZC9za3gvZHQ5UVUKWnU2bHQrUGJvL2htUmlrREswV2FNYjU5QzI3UkIxa2hNM1pma3AxRmRrWGtscDBxSHQ2QlBDb1V6dHU2dXo5dwppZm1heEExTWJFQTZLQm9UTlRWUHpvQ1IzSUhkUk9BYTVMd0dNOERXUWc9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

[root@k8s3 ~]# cat zhangqiaoc.key |base64 --wrap=0

LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb2dJQkFBS0NBUUVBdzd0aDM3cGVoNWw2UnJGUzBaRWRLN052R2FKRHJkekJWd0MzOGZJRzVJYWdzOFlZCnBvLzZVTEFDS1VISytzZ0FFTHVRWUhmelZJcEJsVUhWNW10MUNZeDEwa25sLzdMeUw1Q0liQkRQNmlHcHQrbkYKL0psNUZzenRRWjY3M2ZKV1plbjlaRVhlZXR2cmg2QkFsaWFyazVvZVVsRXREZFZqRTBPcEtOSVJkak5ySmthbQplck4vTFovbEx1M2FKR05QRFM5OEtiQ3YwVEZrazkxZFdZSHFlN2JNVDRZR0lBVVdMQkN5RWxaN09HRG9sVlJqCll3NnZlMUc5Wk9LVEZDaXBwbjRmaWh0YnVMSzVHWFVTZEV2WTV6aUlVMjFjcmd6RVdoL2R2NW1xbjIreXBtZm8KZU9QTlhVRWRUc2dZOFdoVjZaclBwUTZYLy90K2hVZllzTTMyb3dJREFRQUJBb0lCQURsVzZKcEJIN1k3dVAyQwpydzlqb3BjTnpzdEVwTzBIRWNDcUhqa0x2UWN2aFY2RTl1MjhtZ2tQTnVMZE9saHpSTW1pR082WjFUZjc5TENFCkErU25zRGFtNWxFL2d0aUFsTUJvWi82NGdpQkYwbEZsYzdISFNCanMyY2h5ZHZqVEtJcGNuUFhHSGlJQjBTTC8KU0V4MGNha2c2aWNWVHN5UnFaK1lIN01zcng3ZjkxcS9vOEtTdzc4QkZDODArQmtva1Z6ZGxOVVBYbENDd0tkYgo2ODhHVUxXbm1MNGQ1cGFVeDhBbDZZL21xRFVZMFZtSHVoNldNby96OWVPZ1M1ZXBQVkx3cXpqRkVqYmd6R21DCk0wbnlDblIrSVRwMU1Odzg0OWt4NWFPd0MyZXcvcEVmWFR3Qy9DSmNReDB0NUUyZEFjTnBHSGRZTFJSUFBYeEYKbFNoY1ZRa0NnWUVBK2h2dFpVanJTMGR2SnpvTUkwdXNhK01mNlZTNWQvTGhwbHhBNkxKMlhnZ1h3SVJnZWZZagpJNkp2c0tub1hRbGsyaHNpWENsVjdURTZCSEQ0c3pMSG9KeEk1Z3ZETFFxU3Q1bm90N0pmNGJ3RVgwZHd5RzFCCnJnS3NLTXhVR0d1ckVlM0RHb3ptQWhjYmQvK2lBeTR6Q2o4TDVScjR1M3B4Y2l4enZYaWZvL2NDZ1lFQXlGZVUKVkxuWlN1NGM5eE5qVGJnb082U2tMQWdFM2daOGJGVGJ2SnFKUUljeENQaDE0Q2xia0FwRVRFRWE3T3JtbFRNZgp3SDlqUmNMTWREaXN1TVd6S09maTN4aW52eWV3OFYrUXFnTlZocEd4MHUyR3NGREZVMmtLL3phSVptZEpFajdnCjJ5aFRMdWE1TlFSMi9VMGF4T2d3cFcvRXo4RW14QXNHQ1ZwRS83VUNnWUJlNFlWWHJTZ0Y4TjJNQmd0Z3dHNXkKcDBFTjVXUk95c2NyczBlMGZ5OUVVTkdoNlJZb2JtVzZPUDhpQi9Mc2lJbkg3QTlHNHkrRHdlNytqRlRzdGxEZwo3eWtBakduSWhvQk9Rb2IwV1NqaW04OFV6aWROQVpXdkM3aC82YlBsWjhNSUZDaTF3OG5sOVJvb2xjUENiUjVUCnZzTW1jT3IzUkdZUktDZm9Nd0JzMVFLQmdCVHN1TXhrb09Kbm5sVGNESW9vaXVNMzNnSFBVSnJUK0pqa0FCTmgKM0tZRnVNUmtGd096cmlHTVFQZnA4T0wvNGRlQmdIWjlsNlBJcGN3WncwaUZOYUkzSGdZSk1EUVI5RFF4dEExZAp6Y2dCWFo1WE9yTWRySTU2c1RCWXhNUlZVMWQ1ZzhqQUhIZ1FseFdIZ3RvUC9KVEdYNVpYNXlsLzFnbXgwUTZYCkJBL2xBb0dBSCtya3FnU2VhOXhCY2NJRnlobjlmWjdBSW5sSE05
aTNvNk8wUTNBK1NLZFZKUzN4cjVpbUVYZFoKWlhvQXBBTGs3M1NnQjVhWXVsMWl6ZWJjSHJ0QnM5TThkZUVWNmpUSXcxSzNWc29mWlZJSmt0MWQ0WG8rNnc5egpiZ0g2eEF1OEErY1BCdmgzSUF4cGdwSDhPM0Q5aUdwOVVJejJ6dEFmR0VYVmtZc3E4MEU9Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

[root@k8s3 ~]# vi ~/.kube/config

...

users:

- name: zhangqiaoc

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN1ekNDQWFNQ0NRRFFKVlNyT0lMWkp6QU5CZ2txaGtpRzl3MEJBUXNGQURBVk1STXdFUVlEVlFRREV3cHIKZFdKbGNtNWxkR1Z6TUI0WERURTVNRGN3TkRFMU5URXdNRm9YRFRJNU1EY3dNVEUxTlRFd01Gb3dLakVUTUJFRwpBMVVFQXd3S2VtaGhibWR4YVdGdll6RVRNQkVHQTFVRUNnd0tiV0Z1WVdkbGJXVnVkRENDQVNJd0RRWUpLb1pJCmh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTU83WWQrNlhvZVpla2F4VXRHUkhTdXpieG1pUTYzY3dWY0EKdC9IeUJ1U0dvTFBHR0thUCtsQ3dBaWxCeXZySUFCQzdrR0IzODFTS1FaVkIxZVpyZFFtTWRkSko1Zit5OGkrUQppR3dReitvaHFiZnB4ZnlaZVJiTTdVR2V1OTN5Vm1YcC9XUkYzbnJiNjRlZ1FKWW1xNU9hSGxKUkxRM1ZZeE5ECnFTalNFWFl6YXlaR3BucXpmeTJmNVM3dDJpUmpUdzB2ZkNtd3I5RXhaSlBkWFZtQjZudTJ6RStHQmlBRkZpd1EKc2hKV2V6aGc2SlZVWTJNT3IzdFJ2V1Rpa3hRb3FhWitING9iVzdpeXVSbDFFblJMMk9jNGlGTnRYSzRNeEZvZgozYitacXA5dnNxWm42SGpqelYxQkhVN0lHUEZvVmVtYXo2VU9sLy83Zm9WSDJMRE45cU1DQXdFQUFUQU5CZ2txCmhraUc5dzBCQVFzRkFBT0NBUUVBQ1hnODFsa3lVS3pVOTl5bUJ6RHZNUFR4ZFZXaDRuTzZhclZoN0lpU0RUdHAKVWdMKzRkRkR2Z29CQnlhTWVCN2lVQUNVc1cyakVvWHduN2JJcCtTeGVVWTFsbkJGbDBNOGFEVnp3MDg2ZzllWApQV3pydjV1eEhLSy8xV0FiT1ZISkRGMDJibU1oU08wVTZSQ2RoL2V3SzluQjVsNkE5OUxIUkxPc0JuUElId1JuCkJ1YTM1cDJRbnRNQUxaZUhicExSd3FGWUU1VG8yT25CMndTamlSbit6YkM2ZVdhY1FGSk5WZC9za3gvZHQ5UVUKWnU2bHQrUGJvL2htUmlrREswV2FNYjU5QzI3UkIxa2hNM1pma3AxRmRrWGtscDBxSHQ2QlBDb1V6dHU2dXo5dwppZm1heEExTWJFQTZLQm9UTlRWUHpvQ1IzSUhkUk9BYTVMd0dNOERXUWc9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb2dJQkFBS0NBUUVBdzd0aDM3cGVoNWw2UnJGUzBaRWRLN052R2FKRHJkekJWd0MzOGZJRzVJYWdzOFlZCnBvLzZVTEFDS1VISytzZ0FFTHVRWUhmelZJcEJsVUhWNW10MUNZeDEwa25sLzdMeUw1Q0liQkRQNmlHcHQrbkYKL0psNUZzenRRWjY3M2ZKV1plbjlaRVhlZXR2cmg2QkFsaWFyazVvZVVsRXREZFZqRTBPcEtOSVJkak5ySmthbQplck4vTFovbEx1M2FKR05QRFM5OEtiQ3YwVEZrazkxZFdZSHFlN2JNVDRZR0lBVVdMQkN5RWxaN09HRG9sVlJqCll3NnZlMUc5Wk9LVEZDaXBwbjRmaWh0YnVMSzVHWFVTZEV2WTV6aUlVMjFjcmd6RVdoL2R2NW1xbjIreXBtZm8KZU9QTlhVRWRUc2dZOFdoVjZaclBwUTZYLy90K2hVZllzTTMyb3dJREFRQUJBb0lCQURsVzZKcEJIN1k3dVAyQwpydzlqb3BjTnpzdEVwTzBIRWNDcUhqa0x2UWN2aFY2RTl1MjhtZ2tQTnVMZE9saHpSTW1pR082WjFUZjc5TENFCkErU25zRGFtNWxFL2d0aUFsTUJvWi82NGdpQkYwbEZsYzdISFNCanMyY2h5ZHZqVEtJcGNuUFhHSGlJQjBTTC8KU0V4MGNha2c2aWNWVHN5UnFaK1lIN01zcng3ZjkxcS9vOEtTdzc4QkZDODArQmtva1Z6ZGxOVVBYbENDd0tkYgo2ODhHVUxXbm1MNGQ1cGFVeDhBbDZZL21xRFVZMFZtSHVoNldNby96OWVPZ1M1ZXBQVkx3cXpqRkVqYmd6R21DCk0wbnlDblIrSVRwMU1Odzg0OWt4NWFPd0MyZXcvcEVmWFR3Qy9DSmNReDB0NUUyZEFjTnBHSGRZTFJSUFBYeEYKbFNoY1ZRa0NnWUVBK2h2dFpVanJTMGR2SnpvTUkwdXNhK01mNlZTNWQvTGhwbHhBNkxKMlhnZ1h3SVJnZWZZagpJNkp2c0tub1hRbGsyaHNpWENsVjdURTZCSEQ0c3pMSG9KeEk1Z3ZETFFxU3Q1bm90N0pmNGJ3RVgwZHd5RzFCCnJnS3NLTXhVR0d1ckVlM0RHb3ptQWhjYmQvK2lBeTR6Q2o4TDVScjR1M3B4Y2l4enZYaWZvL2NDZ1lFQXlGZVUKVkxuWlN1NGM5eE5qVGJnb082U2tMQWdFM2daOGJGVGJ2SnFKUUljeENQaDE0Q2xia0FwRVRFRWE3T3JtbFRNZgp3SDlqUmNMTWREaXN1TVd6S09maTN4aW52eWV3OFYrUXFnTlZocEd4MHUyR3NGREZVMmtLL3phSVptZEpFajdnCjJ5aFRMdWE1TlFSMi9VMGF4T2d3cFcvRXo4RW14QXNHQ1ZwRS83VUNnWUJlNFlWWHJTZ0Y4TjJNQmd0Z3dHNXkKcDBFTjVXUk95c2NyczBlMGZ5OUVVTkdoNlJZb2JtVzZPUDhpQi9Mc2lJbkg3QTlHNHkrRHdlNytqRlRzdGxEZwo3eWtBakduSWhvQk9Rb2IwV1NqaW04OFV6aWROQVpXdkM3aC82YlBsWjhNSUZDaTF3OG5sOVJvb2xjUENiUjVUCnZzTW1jT3IzUkdZUktDZm9Nd0JzMVFLQmdCVHN1TXhrb09Kbm5sVGNESW9vaXVNMzNnSFBVSnJUK0pqa0FCTmgKM0tZRnVNUmtGd096cmlHTVFQZnA4T0wvNGRlQmdIWjlsNlBJcGN3WncwaUZOYUkzSGdZSk1EUVI5RFF4dEExZAp6Y2dCWFo1WE9yTWRySTU2c1RCWXhNUlZVMWQ1ZzhqQUhIZ1FseFdIZ3RvUC9KVEdYNVpYNXlsLzFnbXgwUTZYCkJBL2xBb0dBSCtya3FnU2VhOXhCY2NJRnl
objlmWjdBSW5sSE05aTNvNk8wUTNBK1NLZFZKUzN4cjVpbUVYZFoKWlhvQXBBTGs3M1NnQjVhWXVsMWl6ZWJjSHJ0QnM5TThkZUVWNmpUSXcxSzNWc29mWlZJSmt0MWQ0WG8rNnc5egpiZ0g2eEF1OEErY1BCdmgzSUF4cGdwSDhPM0Q5aUdwOVVJejJ6dEFmR0VYVmtZc3E4MEU9Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

    [root@k8s3 ~]# kubectl config view
    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority: /root/ca.crt
        server: https://83.16.16.71:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        namespace: ns-zhangqiaoc
        user: zhangqiaoc
      name: zhangqiaoc@kubernetes
    current-context: zhangqiaoc@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: zhangqiaoc
      user:
        client-certificate-data: REDACTED
        client-key-data: REDACTED
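The values of client-certificate-data and client-key-data are simply the PEM files encoded as base64 on a single line, which is what `base64 --wrap=0` produces. As a rough sketch, the same string can be produced in Python; `inline_data` is a hypothetical helper name and the PEM content below is a placeholder, not a real certificate.

```python
import base64


def inline_data(pem_bytes):
    """Base64-encode PEM bytes on one line, like `base64 --wrap=0`."""
    return base64.b64encode(pem_bytes).decode('ascii')


# Placeholder PEM content; in practice, read the bytes of zhangqiaoc.crt.
pem = b'-----BEGIN CERTIFICATE-----\nAAAA\n-----END CERTIFICATE-----\n'
encoded = inline_data(pem)
print(encoded)

# Decoding gives back the original PEM text unchanged.
print(base64.b64decode(encoded) == pem)
```

Because every PEM certificate starts with the same BEGIN line, the encoded value always starts with the same characters ("LS0tLS1CRUdJTi..."), which makes it easy to recognize in a kubeconfig.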

On the master

7. Verify

    [root@k8s1 ~]# kubectl get pods -A
    NAMESPACE       NAME                                          READY   STATUS    RESTARTS   AGE
    default         mysql-0                                       2/2     Running   0          2d13h
    default         mysql-1                                       2/2     Running   0          2d13h
    default         mysql-2                                       2/2     Running   0          2d13h
    default         mysql-client-649f4f447-79c4r                  1/1     Running   0          20h
    default         nfs-provisioner-78fc579c97-9snrn              1/1     Running   0          2d13h
    default         nfs-server-7b548776cd-xtcl2                   1/1     Running   0          2d13h
    default         ping-7dc8575788-h4qf4                         1/1     Running   0          23h
    kube-system     calico-kube-controllers-8464b48649-w4lvz      1/1     Running   0          2d13h
    kube-system     calico-node-bbwwk                             1/1     Running   0          2d13h
    kube-system     calico-node-dprm2                             1/1     Running   0          2d13h
    kube-system     calico-node-kcmrw                             1/1     Running   0          2d13h
    kube-system     calico-node-lhsds                             1/1     Running   0          2d13h
    kube-system     coredns-fb8b8dccf-4p8jh                       1/1     Running   0          21h
    kube-system     coredns-fb8b8dccf-d2v8p                       1/1     Running   0          21h
    kube-system     etcd-k8s1.zhangqiaoc.com                      1/1     Running   0          2d13h
    kube-system     kube-apiserver-k8s1.zhangqiaoc.com            1/1     Running   0          2d13h
    kube-system     kube-controller-manager-k8s1.zhangqiaoc.com   1/1     Running   7          2d13h
    kube-system     kube-proxy-m2wbv                              1/1     Running   0          2d13h
    kube-system     kube-proxy-ns78p                              1/1     Running   0          2d13h
    kube-system     kube-proxy-x2qdc                              1/1     Running   0          2d13h
    kube-system     kube-proxy-xwhfh                              1/1     Running   0          2d13h
    kube-system     kube-scheduler-k8s1.zhangqiaoc.com            1/1     Running   9          2d13h
    ns-zhangqiaoc   mysql-client-649f4f447-pdknr                  1/1     Running   0          63s     <- it is here in ns-zhangqiaoc namespace