Tomcat - Which cipher suites are supported?

For security reason, we need to remove some unsafe cipher suites on Tomcat. And after removing, there are only two cipher suites left: TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA, TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA

After restart, the webpage is not accessible. An error is reported in the catalina.out

Caused by: java.lang.IllegalArgumentException: None of the [ciphers] specified are supported by the SSL engine : [[TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA, TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA]] at org.apache.tomcat.util.net.SSLUtilBase.getEnabled(SSLUtilBase.java:90) at org.apache.tomcat.util.net.SSLUtilBase.(SSLUtilBase.java:64) at org.apache.tomcat.util.net.jsse.JSSEUtil.(JSSEUtil.java:142) at org.apache.tomcat.util.net.jsse.JSSEImplementation.getSSLUtil(JSSEImplementation.java:49) at org.apache.tomcat.util.net.AbstractJsseEndpoint.createSSLContext(AbstractJsseEndpoint.java:102)

The available cipher suites is related with JDK. So, for this one, the environment of Tomcat is

$ java -cp lib/catalina.jar org.apache.catalina.util.ServerInfo

Server version: Apache Tomcat/8.5.20

Server built: Aug 2 2017 21:35:49 UTC

Server number: 8.5.20.0

OS Name: Linux

OS Version: 2.6.32-754.3.5.el6.x86_64

Architecture: amd64

JVM Version: 1.7.0_51-b13

JVM Vendor: Oracle Corporation

The another one which is working is:

$ java -cp lib/catalina.jar org.apache.catalina.util.ServerInfo

Server version: Apache Tomcat/8.5.20

Server built: Aug 2 2017 21:35:49 UTC

Server number: 8.5.20.0

OS Name: Linux

OS Version: 3.10.0-862.14.4.el7.x86_64

Architecture: amd64

JVM Version: 1.7.0_161-b13

JVM Vendor: Oracle Corporation

We can see, this one is 1.7.0_51-b13, another which is working is 1.7.0_161-b13

Using the following java to list the supported cipher suites. https://confluence.atlassian.com/stashkb/list-ciphers-used-by-jvm-679609085.html

import java.util.Iterator;

import java.util.Map;

import java.util.TreeMap;

import javax.net.ssl.SSLServerSocketFactory;

public class Ciphers

{

public static void main(String[] args)

throws Exception

{

SSLServerSocketFactory ssf = (SSLServerSocketFactory)SSLServerSocketFactory.getDefault();

String[] defaultCiphers = ssf.getDefaultCipherSuites();

String[] availableCiphers = ssf.getSupportedCipherSuites();

TreeMap ciphers = new TreeMap();

for(int i=0; i<availableCiphers.length; ++i )

ciphers.put(availableCiphers[i], Boolean.FALSE);

for(int i=0; i<defaultCiphers.length; ++i )

ciphers.put(defaultCiphers[i], Boolean.TRUE);

System.out.println("Default\tCipher");

for(Iterator i = ciphers.entrySet().iterator(); i.hasNext(); ) {

Map.Entry cipher=(Map.Entry)i.next();

if(Boolean.TRUE.equals(cipher.getValue()))

System.out.print('*');

else

System.out.print(' ');

System.out.print('\t');

System.out.println(cipher.getKey());

}

}

}

$ java Ciphers Default Cipher SSL_DHE_DSS_EXPORT_WITH_DES40_CBC_SHA * SSL_DHE_DSS_WITH_3DES_EDE_CBC_SHA SSL_DHE_DSS_WITH_DES_CBC_SHA SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA * SSL_DHE_RSA_WITH_3DES_EDE_CBC_SHA SSL_DHE_RSA_WITH_DES_CBC_SHA SSL_DH_anon_EXPORT_WITH_DES40_CBC_SHA SSL_DH_anon_EXPORT_WITH_RC4_40_MD5 SSL_DH_anon_WITH_3DES_EDE_CBC_SHA SSL_DH_anon_WITH_DES_CBC_SHA SSL_DH_anon_WITH_RC4_128_MD5 SSL_RSA_EXPORT_WITH_DES40_CBC_SHA SSL_RSA_EXPORT_WITH_RC4_40_MD5 * SSL_RSA_WITH_3DES_EDE_CBC_SHA SSL_RSA_WITH_DES_CBC_SHA SSL_RSA_WITH_NULL_MD5 SSL_RSA_WITH_NULL_SHA * SSL_RSA_WITH_RC4_128_MD5 * SSL_RSA_WITH_RC4_128_SHA * TLS_DHE_DSS_WITH_AES_128_CBC_SHA * TLS_DHE_DSS_WITH_AES_128_CBC_SHA256 * TLS_DHE_RSA_WITH_AES_128_CBC_SHA * TLS_DHE_RSA_WITH_AES_128_CBC_SHA256 TLS_DH_anon_WITH_AES_128_CBC_SHA TLS_DH_anon_WITH_AES_128_CBC_SHA256 * TLS_ECDHE_ECDSA_WITH_3DES_EDE_CBC_SHA * TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA * TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256 TLS_ECDHE_ECDSA_WITH_NULL_SHA * TLS_ECDHE_ECDSA_WITH_RC4_128_SHA * TLS_ECDHE_RSA_WITH_3DES_EDE_CBC_SHA * TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA * TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256 TLS_ECDHE_RSA_WITH_NULL_SHA * TLS_ECDHE_RSA_WITH_RC4_128_SHA * TLS_ECDH_ECDSA_WITH_3DES_EDE_CBC_SHA * TLS_ECDH_ECDSA_WITH_AES_128_CBC_SHA * TLS_ECDH_ECDSA_WITH_AES_128_CBC_SHA256 TLS_ECDH_ECDSA_WITH_NULL_SHA * TLS_ECDH_ECDSA_WITH_RC4_128_SHA * TLS_ECDH_RSA_WITH_3DES_EDE_CBC_SHA * TLS_ECDH_RSA_WITH_AES_128_CBC_SHA * TLS_ECDH_RSA_WITH_AES_128_CBC_SHA256 TLS_ECDH_RSA_WITH_NULL_SHA * TLS_ECDH_RSA_WITH_RC4_128_SHA TLS_ECDH_anon_WITH_3DES_EDE_CBC_SHA TLS_ECDH_anon_WITH_AES_128_CBC_SHA TLS_ECDH_anon_WITH_NULL_SHA TLS_ECDH_anon_WITH_RC4_128_SHA * TLS_EMPTY_RENEGOTIATION_INFO_SCSV TLS_KRB5_EXPORT_WITH_DES_CBC_40_MD5 TLS_KRB5_EXPORT_WITH_DES_CBC_40_SHA TLS_KRB5_EXPORT_WITH_RC4_40_MD5 TLS_KRB5_EXPORT_WITH_RC4_40_SHA TLS_KRB5_WITH_3DES_EDE_CBC_MD5 TLS_KRB5_WITH_3DES_EDE_CBC_SHA TLS_KRB5_WITH_DES_CBC_MD5 TLS_KRB5_WITH_DES_CBC_SHA TLS_KRB5_WITH_RC4_128_MD5 TLS_KRB5_WITH_RC4_128_SHA * TLS_RSA_WITH_AES_128_CBC_SHA * TLS_RSA_WITH_AES_128_CBC_SHA256 TLS_RSA_WITH_NULL_SHA256

We can see the cipher suites I want to use are not on the list. Actually, we can add new cipher suites. But because this JDK is too old, we decided to upgrade to OPENJDK 1.8 this time.

NDBCluster - Configuration a private network for Data Nodes

It is recommended to split the Data Nodes from the SQL Nodes and, use a specific network for the intercommunication of Data Nodes.

The way to archive this is to use [TCP] in the cluster configuration.

For example, in my test env, two data node: ndb02 Public: 83.16.16.52 Private: 192.168.123.52 ndb03 Public: 83.16.16.53 Private: 192.168.123.53

In the config.ini:

[ndbd] HostName=ndb02 NodeId=2 [ndbd] HostName=ndb03 NodeId=3

In order to use the private network for the intercommunication in among data nodes, we need to add the following entities in the config.ini.

[TCP] NodeId1=2 NodeId2=3 HostName1=192.168.123.52 HostName2=192.168.123.53

Due to I only have two data nodes, so there is just one pair. If you have more than 2, there should be one [TCP] for each pair.

For example, if there are three data nodes, we need 1 for (1,2), 1 for (1,3) and 1 for (2,3)

The document said that this change only needs Node Restart, but that is Yes and No. One pair of nodes needs to be shutdown at the same time and then, start. So, if replics equal to 2, and the cluster only has two Data Nodes, so, there is only 1 pair, so all Data Nodes have to be shutdown at the same time. If there are four Data Nodes, we can do it for 2 nodes each time. But each time, we can only add one pair in the config.ini, and do Node Restart.

After restart, we can make sure which interface is used for intercommunication:

mysql> select * from ndbinfo.transporters where (node_id = 2 and remote_node_id = 3) or (node_id=3 and remote_node_id=2) \G *************************** 1. row *************************** node_id: 2 remote_node_id: 3 status: CONNECTED remote_address: 192.168.123.53 bytes_sent: 414924 bytes_received: 228560 connect_count: 1 overloaded: 0 overload_count: 0 slowdown: 0 slowdown_count: 0 *************************** 2. row *************************** node_id: 3 remote_node_id: 2 status: CONNECTED remote_address: 192.168.123.52 bytes_sent: 228560 bytes_received: 414924 connect_count: 1 overloaded: 0 overload_count: 0 slowdown: 0 slowdown_count: 0 2 rows in set (0.01 sec)

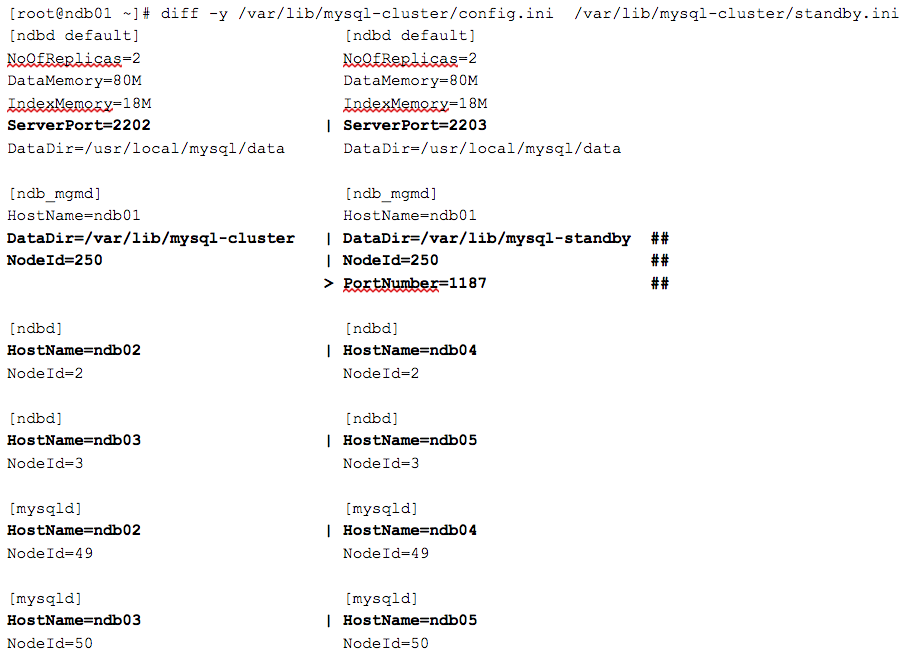

NDBCluster - How to run two ndb_mgmd on the same box?

For the test propose, I want to save some resource, so I decided to start two management processes for PRIMARY and STANDBY clusters on the same machine.

Unfortunately, we cannot just invoke ndb_mgmd with different configuration files twice, because some files on disk will be overwritten.

We have to do the following things:

Two folders for the cache of configuration files

- /usr/local/mysql/mysql-cluster

- /usr/local/mysql/mysql-standby

Two DataDir folders which used for log files and lock files, if using the same NodeId number for both clusters.

- /var/lib/mysql-cluster

- /var/lib/mysql-standby

Two configuration files

- /var/lib/mysql-cluster/config.ini

- /var/lib/mysql-cluster/standby.ini

Change the following parameters for standby cluster.

- DataDir, if using the same NodeId for the management node</li>

- NodeId, if using the same DataDir for the management node</li>

- PortNumber in [ndb_mgmd]</li>

- ServerPort in [ndbd default] if the standby cluster on the same machines with primary cluster.

- The data node / sql node information in [ndbd] and [mysqld]

For my configuration

Then, start the Primary Cluster

/usr/local/mysql/bin/ndb_mgmd -f /var/lib/mysql-cluster/config.ini

Next, the Standby Cluster

/usr/local/mysql/bin/ndb_mgmd -f /var/lib/mysql-cluster/standby.ini --configdir /usr/local/mysql/mysql-standby

NDBCluster - Error 240

MySQL NDBCluster Error 240

Yesterday, an error 240 was reported in our MySQL NDBCluster database which is an very old NDBCluster, version is mysql-5.6.25 ndb-7.4.7

mysql> START TRANSACTION; Query OK, 0 rows affected (0.00 sec) mysql> Delete from table1 where uid=29 and rid in (2,4,5,6,7,8); Query OK, 6 rows affected (0.00 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 5); Query OK, 1 row affected (0.01 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 4); ERROR 1296 (HY000): Got error 240 'Unknown error code' from NDBCLUSTER

Error Code information can be found here

https://dev.mysql.com/doc/ndbapi/en/ndb-error-codes-internal-error.html

Error 240 means “Invalid data encountered during foreign key trigger execution”

I told the coder to rerun the SQL, but the error occurred again.

I did some testing on my own. table1 has two FK, one is to table_u and another is to table_r. If we do not start transaction, and use autocommit=on, there is no error

mysql> Delete from table1 where uid=29 and rid in (2,4,5,6,7,8); Query OK, 6 rows affected (0.01 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 5); Query OK, 1 row affected (0.01 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 4); Query OK, 1 row affected (0.00 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 8); Query OK, 1 row affected (0.00 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 2); Query OK, 1 row affected (0.01 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 7); Query OK, 1 row affected (0.00 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 6); Query OK, 1 row affected (0.00 sec) mysql> commit; Query OK, 0 rows affected (0.00 sec)

And if we disable the check of foreign key in session no error.

mysql> set session foreign_key_checks=0; Query OK, 0 rows affected (0.00 sec) mysql> START TRANSACTION; Query OK, 0 rows affected (0.00 sec) mysql> Delete from table1 where uid=29 and rid in (2,4,5,6,7,8); Query OK, 6 rows affected (0.00 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 5); Query OK, 1 row affected (0.00 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 4); Query OK, 1 row affected (0.01 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 8); Query OK, 1 row affected (0.00 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 2); Query OK, 1 row affected (0.00 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 7); Query OK, 1 row affected (0.00 sec) mysql> INSERT INTO table1 (uid, rid) VALUES (29, 6); Query OK, 1 row affected (0.01 sec) mysql> commit; Query OK, 0 rows affected (0.00 sec) mysql> set session foreign_key_checks=1; Query OK, 0 rows affected (0.00 sec)

I tried to rolling restart the NDB data node, unfortunately, the error is still there. I copied the related table to an new database on the same instance.

mysqldump -uroot -pxxxx --skip-add-drop-table portal table1 table2 table3 |mysql -uroot -pxxxx portal1

And then, do test, there is no error.

So, we copied all database to the new database, the error disappeared.

There are some article online about this error, but no one fits to our situation.

MySQL NDBCluster is the only engine in MySQL that supports FK. It uses trigger internal to achieve its goal.

So, Error 240 means that an error encounters during the FK checking.

Multiple reasons may cause this error, for example, there is no enough memory.

There is no way to know the reason. But I guess there is a logical corruption on the index. I suggest to try the following, if the application can stop:

- Recreate PKs which the FK relay on.

- Recreate FKs on all related tables.

- Shutdown the whole cluster and restart, in the way, indexes will be recreated.

- Backup / Drop / Restore all related tables.

Oracle - Proxy User and VPD

We can use Oracle database to do authentication by using Proxy User.

After authentication, we should have a way to do authorization.

Unfortunately, the proxy user can do any SELECT and DML on the objects of application user directly without any restriction.

Proxy User can be granted a special role, but, the privileges on the role can just be on the objects of other schemas or system privileges. So at this time, we need to use VPD to archive our goal.

The following is a demo, which is using HR example schema.

If you don’t have HR schema, go Github to clone:

$ git clone --recursive https://github.com/oracle/oracle-db-examples.git

First, we need to create database user, for each user in HR.EMPLOYEES

select 'create user '||email||' identified by '||email||';' from hr.employees;

Second, each user can be working as a proxy user to access the data in HR. No specific role or premission needs to be granted to proxy user.

select 'alter user hr grant connect through '||email||';' from hr.employees;

Now, we can do test:

$ sqlplus abull[hr]/ABULL@127.0.0.1/hr

SQL*Plus: Release 18.0.0.0.0 - Production on Sun Dec 16 20:40:13 2018

Version 18.3.0.0.0

Copyright (c) 1982, 2018, Oracle. All rights reserved.

Last Successful login time: Sun Dec 16 2018 08:32:00 -05:00

Connected to:

Oracle Database 18c Enterprise Edition Release 18.0.0.0.0 - Production

Version 18.3.0.0.0

SQL> select count(*) from hr.employees;

COUNT(*)

----------

107

Next, I want to impose some rules on each user, to restrict them that they can only access themselves and their subordinate in HR.EMPLOYEES and HR.JOB_HISTORY.

For example:

- Steven King is the boss of the company, so he can access the record of everyone.

- Neena Kochhar is a supervisor, he can access the records of his subordinate.

- Alexis Bull is only an employees, so he can only access the records himself.

First, we have to create an VPD admin user and a context

CREATE OR REPLACE CONTEXT hr_ctx using hr_package;

Second, due to VPD cannot support CONNECT BY clause, and also, I want to have a VPD policy on EMPLOYEES table which is the source I need to use to initial the context. VPD has the problems for this recursive invoking also, so I replicate HR.EMPLOYEES to vpd_admin user by using mview.

-- SYS, create VPD_ADMIN user for management

create user vpd_admin identified by vpd_admin;

grant unlimited tablespace to vpd_admin;

grant create session, resource to vpd_admin;

grant create any context to vpd_admin;

grant all on hr.employees to vpd_admin;

grant all on hr.MLOG$_EMPLOYEES to vpd_admin;

grant create materialized view to vpd_admin;

grant execute on DBMS_RLS to vpd_admin;

-- VPD_ADMIN, create a context

sqlplus vpd_admin/vpd_admin@127.0.0.1/hr

CREATE OR REPLACE CONTEXT hr_ctx using hr_package;

-- HR, create mview log for replication

create materialized view log on employees with primary key;

-- VPD_ADMIN, create mview log. The application user cannot access this tables, so, they cannot get all data of employees.

create materialized view hr_employees refresh fast as select * from hr.employees;

-- create HR_PACKAGE to set CONTEXT and for VPD

CREATE OR REPLACE PACKAGE hr_package IS

PROCEDURE set_hr_context;

FUNCTION get_hr_context return varchar;

FUNCTION vpd_control(schema_p in varchar2, table_p in varchar2) return varchar2;

type NUMBER_ARRAY is table of number;

Function get_subordinate_emp_id return NUMBER_ARRAY PIPELINED;

type employees_type is record(email varchar2(25), emp_id number(6));

type employees_table is table of employees_type;

END;

/

CREATE OR REPLACE PACKAGE BODY hr_package AS

-- set EMP_ID in HR_CTX. If login user is SYS/SYSTEM/HR, set to 0, if not the application user, set to -1

PROCEDURE set_hr_context

IS

chk_cnt number;

emp_id number;

BEGIN

select count(*) into chk_cnt from hr_employees where email = SYS_CONTEXT('USERENV','PROXY_USER');

if chk_cnt > 0 then

select employee_id into emp_id

from hr_employees where email = SYS_CONTEXT('USERENV','PROXY_USER');

DBMS_SESSION.SET_CONTEXT('hr_ctx','EMP_ID',emp_id);

elsif SYS_CONTEXT('USERENV','SESSION_USER') in ('SYS','SYSTEM','HR') then

DBMS_SESSION.SET_CONTEXT('hr_ctx','EMP_ID',0);

else

DBMS_SESSION.SET_CONTEXT('hr_ctx','EMP_ID',-1);

end if;

END;

-- this is a table function, used for VPD

Function get_subordinate_emp_id

return NUMBER_ARRAY

PIPELINED

is

cursor c1 is

select employee_id from hr_employees

connect by manager_id = prior employee_id

start with employee_id = SYS_CONTEXT('hr_ctx','EMP_ID');

emp_id number;

begin

open c1;

loop

fetch c1 into emp_id;

exit when c1%notfound;

pipe row(emp_id);

end loop;

end;

FUNCTION get_hr_context

return varchar

IS

curr_context varchar2(2000);

begin

SELECT 'EMP_ID:'||SYS_CONTEXT ('hr_ctx', 'EMP_ID') into curr_context FROM DUAL;

return curr_context;

end;

-- for VPD

-- if the login user is SYSTEM/SYS/HR, then it can access all data.

-- if the login user is an application user, it can access the data they are allowed to access.

-- if the login user is not anyone above, it cannot access any data

FUNCTION vpd_control(schema_p in varchar2, table_p in varchar2)

return varchar2

is

emp_id number;

pred varchar2(400);

begin

if schema_p = 'HR' then

emp_id := SYS_CONTEXT('hr_ctx','EMP_ID');

if emp_id < 0 then

pred := ' 1 = 2 ';

elsif emp_id = 0 then

pred := '';

else

--if table_p = 'EMPLOYEES' then

-- pred := 'employee_id in (select COLUMN_VALUE from table(system.hr_package.get_subordinate_emp_id))';

--elsif table_p = 'JOB_HISTORY' then

-- pred := 'employee_id in (select COLUMN_VALUE from table(system.hr_package.get_subordinate_emp_id))';

--end if;

pred := 'employee_id in (select COLUMN_VALUE from table(vpd_admin.hr_package.get_subordinate_emp_id))';

end if;

else

pred := ' 1 = 2 ';

end if;

return pred;

end;

end;

/

grant execute on vpd_admin.hr_package to hr;

Next, before we create the VPD policy, we do test in advance.

$ sqlplus abull[hr]/ABULL@127.0.0.1/hr

-- the normal user cannot call SET_CONTEXT to change the EMP_ID to elevate the permission

SQL> exec DBMS_SESSION.SET_CONTEXT('hr_ctx','EMP_ID',11111);

BEGIN DBMS_SESSION.SET_CONTEXT('hr_ctx','EMP_ID',11111); END;

*

ERROR at line 1:

ORA-01031: insufficient privileges

ORA-06512: at "SYS.DBMS_SESSION", line 152

ORA-06512: at line 1

SQL>desc vpd_admin.hr_employees

ERROR:

ORA-04043: object vpd_admin.hr_employees does not exist

SQL> exec vpd_admin.hr_package.set_hr_context;

PL/SQL procedure successfully completed.

SQL> select vpd_admin.hr_package.get_hr_context from dual;

GET_HR_CONTEXT

--------------------------------------------------------------------------------

EMP_ID:185

SQL> select vpd_admin.hr_package.vpd_control('HR','EMPLOYEES') from dual;

VPD_ADMIN.HR_PACKAGE.VPD_CONTROL('HR','EMPLOYEES')

--------------------------------------------------------------------------------

employee_id in (select COLUMN_VALUE from table(system.hr_package.get_subordinate

_emp_id))

SQL> select count(*) from table(vpd_admin.hr_package.get_subordinate_emp_id);

COUNT(*)

----------

1

$ sqlplus system/oracle@127.0.0.1/hr

SQL> exec vpd_admin.hr_package.set_hr_context;

PL/SQL procedure successfully completed.

SQL> select vpd_admin.hr_package.get_hr_context from dual;

GET_HR_CONTEXT

--------------------------------------------------------------------------------

EMP_ID:0

SQL> select vpd_admin.hr_package.vpd_control('HR','EMPLOYEES') from dual;

VPD_ADMIN.HR_PACKAGE.VPD_CONTROL('HR','EMPLOYEES')

--------------------------------------------------------------------------------

Next, we can add VPD policy:

BEGIN DBMS_RLS.ADD_POLICY ( object_schema => 'HR', object_name => 'EMPLOYEES', policy_name => 'HR_EMPLOYEES_POLICY', function_schema => 'VPD_ADMIN', policy_function => 'HR_PACKAGE.vpd_control', -- statement_types => 'select', policy_type => DBMS_RLS.CONTEXT_SENSITIVE); END; / BEGIN DBMS_RLS.ADD_POLICY ( object_schema => 'HR', object_name => 'JOB_HISTORY', policy_name => 'HR_JOB_HISTORY_POLICY', function_schema => 'VPD_ADMIN', policy_function => 'HR_PACKAGE.vpd_control', -- statement_types => 'select', policy_type => DBMS_RLS.CONTEXT_SENSITIVE); END; /

Then, do some test

-- SYSTEM, can see all data.

SQL> exec vpd_admin.hr_package.set_hr_context;

PL/SQL procedure successfully completed.

SQL> select count(*) from hr.employees;

COUNT(*)

----------

107

SQL> select count(*) from hr.job_history;

COUNT(*)

----------

10

-- HR, can see all data

SQL> select count(*) from employees;

COUNT(*)

----------

107

SQL> select count(*) from job_history;

COUNT(*)

----------

10

-- SKING, BOSS, can see all data

SQL> select count(*) from employees;

COUNT(*)

----------

107

SQL> select count(*) from job_history;

COUNT(*)

----------

10

-- NKochhar, a supervisor, can only see the records he is allowed

SQL> select count(*) from employees;

COUNT(*)

----------

12

SQL> select count(*) from job_history;

COUNT(*)

----------

4

-- ABULL, an employee, can only see himself.

SQL> select count(*) from employees;

COUNT(*)

----------

1

SQL> select count(*) from job_history;

COUNT(*)

----------

0

Finally, we need to have a way to set CONTEXT automatically, we can use logon trigger.

-- SYS

CREATE OR REPLACE TRIGGER trig_set_client_info

AFTER LOGON ON database

DECLARE

BEGIN

DBMS_SESSION.SET_IDENTIFIER(SYS_CONTEXT('USERENV','PROXY_USER'));

vpd_admin.hr_package.set_hr_context;

END;

/